
MRI Compatible Robotics

Magnetic Resonance Imaging (MRI)-guided robotic approaches have been introduced to improve prostate interventions in terms of accuracy, procedure time, and ease of operation. However, the robotic systems introduced so far have shortcomings that have kept them out of clinical use, mainly insufficient integration with the MRI scanner and the exclusion of clinicians from the procedure as a result of autonomous robot designs. To overcome these problems, a 4-DOF pneumatically actuated robot was developed for transperineal prostate intervention under MRI guidance in a collaborative project between SPL at Harvard Medical School, Johns Hopkins University, the AIM Lab at Worcester Polytechnic Institute, and the Laboratory for Percutaneous Surgery at Queen's University, Canada. We are currently finalizing pre-clinical experiments in preparation for the first experiment(s) with real patients, which are coming up soon. We have also been granted funding for another 5 years (until 2015) to develop the third version of the robot, which not only addresses the shortcomings of the previous versions but also enables teleoperated needle maneuvering under real-time MRI guidance with haptic feedback. Please keep visiting this website for the latest updates!

Eye Robots

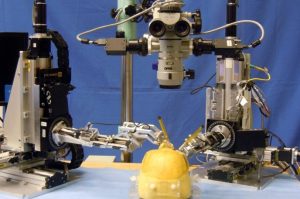

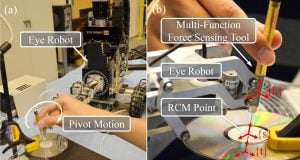

The Steady-Hand Eye Robot is a cooperatively controlled robotic assistant designed for retinal microsurgery. Cooperative control gives the surgeon full control of the robot, with the surgeon's hand movements directly dictating the robot's movements. The robot can also actively assist during the procedure by enforcing virtual fixtures that help protect the patient and by eliminating physiological tremor from the surgeon's hand motion.
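Cooperative control is commonly realized as admittance control: the robot measures the force the surgeon applies to the tool handle and moves with a velocity proportional to it. The sketch below is a minimal, generic version of that idea, not the Eye Robot's actual controller. The gain, the first-order low-pass filter standing in for tremor suppression, and the direction-based virtual fixture are all illustrative assumptions, and every name (`admittance_step`, `fixture_dir`, `off_axis_scale`) is hypothetical.

```python
import numpy as np


def admittance_step(force, prev_velocity, gain=2.0, alpha=0.1,
                    fixture_dir=None, off_axis_scale=0.0):
    """One control cycle of a hypothetical admittance controller.

    force:          3-vector of handle force applied by the surgeon
    prev_velocity:  velocity commanded in the previous cycle
    gain:           admittance gain (velocity per unit force), assumed value
    alpha:          smoothing factor of the low-pass filter (0 < alpha <= 1)
    fixture_dir:    optional preferred direction of motion (virtual fixture)
    off_axis_scale: fraction of off-axis motion allowed by the fixture
    """
    force = np.asarray(force, dtype=float)
    prev_velocity = np.asarray(prev_velocity, dtype=float)

    # Admittance law: desired velocity is proportional to the applied force.
    v_desired = gain * force

    # First-order low-pass (exponential moving average) attenuates
    # high-frequency components such as hand tremor.
    v = (1.0 - alpha) * prev_velocity + alpha * v_desired

    if fixture_dir is not None:
        d = np.asarray(fixture_dir, dtype=float)
        d = d / np.linalg.norm(d)
        along = np.dot(v, d) * d
        # Virtual fixture: keep motion along the preferred direction and
        # scale down (or block, if off_axis_scale == 0) motion off it.
        v = along + off_axis_scale * (v - along)
    return v
```

Called once per control cycle with the latest force reading and the previous command, a steady push converges to `gain * force`, while a force component that alternates sign every cycle is strongly attenuated by the filter. A real system would tune the filter to the tremor band and the controller's sample rate rather than use a fixed `alpha`.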

Force Sensing Microsurgical Instruments

Retinal microsurgery requires extremely delicate manipulation of retinal tissue. One of the main technical limitations in vitreoretinal surgery is the lack of force sensing, since the forces involved in dissection are below the surgeon's sensory threshold.

We are developing force-sensing instruments to measure the very delicate forces exerted on eye tissue. Fiber-optic sensors are incorporated into the tool shaft to sense forces distal to the sclera, which avoids the masking effect of forces between the tool and the sclerotomy. We have built 2-DOF hook and forceps tools with a force resolution of 0.25 mN; 3-DOF force-sensing instruments are under development.
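To make the sensing principle concrete, here is a minimal calibration sketch. It assumes wavelength-shift fiber sensors (for example fiber Bragg gratings), three fibers spaced 120° around the shaft, a linear relation between transverse tip force and wavelength shift, and a temperature change that shifts all three fibers equally. None of these details are stated above, and the function names are hypothetical. The common-mode shift is removed by subtracting the per-reading mean, and a 2×3 calibration matrix is fit by least squares from readings taken under known loads.

```python
import numpy as np


def compensate(shifts):
    """Remove the common-mode component (e.g., a temperature change seen
    equally by all fibers) by subtracting each reading's mean shift."""
    shifts = np.asarray(shifts, dtype=float)
    return shifts - shifts.mean(axis=-1, keepdims=True)


def calibrate(shifts, forces):
    """Least-squares fit of a 2x3 matrix K with force ~= K @ compensated shift.

    shifts: (n, 3) wavelength shifts recorded while applying known loads
    forces: (n, 2) the known transverse loads (e.g., in mN)
    """
    X, *_ = np.linalg.lstsq(compensate(shifts),
                            np.asarray(forces, dtype=float), rcond=None)
    return X.T


def estimate_force(K, shift):
    """Estimate the 2-DOF transverse tip force from one 3-fiber reading."""
    return K @ compensate(shift)
```

With synthetic, noise-free calibration data that includes a random common-mode offset, the fitted `K` recovers an unseen test force exactly, because the mean subtraction cancels the offset. Real calibration would use many loaded readings, account for noise and nonlinearity, and report the achieved resolution separately.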

Force Sensing Microsurgical Instruments Project Page

Image Overlay

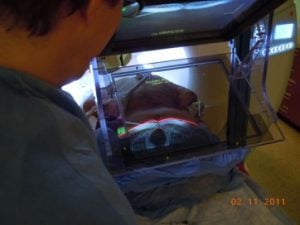

The goal of this project is to provide information assistance to a physician placing a biopsy or therapy needle into a patient's anatomy in an online intraoperative imaging environment. The project started within the CISST ERC at Johns Hopkins as a collaboration between JHU and Dr. Ken Masamune, who was visiting from the University of Tokyo. It is now a collaborative project between JHU and the Laboratory for Percutaneous Surgery at Queen's University. The system combines a display and a semi-transparent mirror arranged so that the virtual image of a cross-sectional image on the display is aligned with the corresponding cross-section through the patient's body. The physician uses his or her natural eye-hand coordination to place the needle on the target. Although initial development was done with CT scanners, more recently we have been developing an MR-compatible version.
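The overlay relies on plane-mirror geometry: the mirror forms a virtual image of each display point at that point's reflection across the mirror plane, p' = p − 2((p − q)·n)n, where q is any point on the mirror and n its unit normal. Alignment therefore means placing the display so that its reflected plane coincides with the scan plane through the patient. The sketch below shows only that reflection, assuming an ideal flat mirror described by a point and a normal in a hypothetical coordinate frame. It is not the system's calibration procedure.

```python
import numpy as np


def reflect_point(p, plane_point, plane_normal):
    """Mirror image of point(s) p across the plane through plane_point
    with normal plane_normal. p may be a single 3-vector or an (n, 3) array."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(plane_point, dtype=float)
    n = np.asarray(plane_normal, dtype=float)
    n = n / np.linalg.norm(n)
    # Signed distance from each point to the mirror plane.
    d = (p - q) @ n
    # Move each point back through the plane by twice that distance.
    return p - 2.0 * np.expand_dims(d, -1) * n
```

For example, with a mirror through the origin tilted 45° (normal (0, 1, −1)), display points on the plane y = 1 appear on the plane z = 1, so a display mounted on y = 1 would overlay a scan slice lying on z = 1. Reflecting twice returns the original point, which is a quick sanity check on the geometry.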

Image Overlay Project Page at Queen’s University

Small Animal Radiation Research Platform (SARRP)

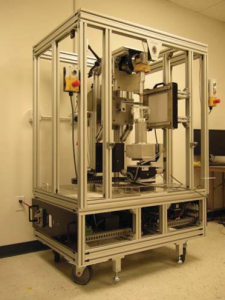

In cancer research, well-characterized small animal models of human cancer, such as transgenic mice, have greatly accelerated the development of cancer treatments. The goal of the Small Animal Radiation Research Platform (SARRP) is to make those same models available for developing and evaluating novel radiation therapies. SARRP can deliver high-resolution (submillimeter), optimally planned conformal radiation with on-board cone-beam CT (CBCT) guidance. SARRP has been licensed to Xstrahl (previously Gulmay Medical) and is described on Xstrahl's Small Animal Radiation Research Platform page. Recent developments include a Treatment Planning System (TPS) module for 3D Slicer that lets the user specify a treatment plan consisting of x-ray beams and conformal arcs and uses a GPU to quickly compute the resulting dose volume.

Active: 2005 – Present

Robotic Assisted Image-Guided Radiation Therapy

The goal of this project is to construct a robotically controlled, integrated 3D x-ray and ultrasound imaging system to guide radiation treatment of soft-tissue targets. We are especially interested in registering the ultrasound images to the CT images (from both the simulator and the accelerator), because this enables the treatment plan and overall anatomy to be fused with the ultrasound image. However, ultrasound image acquisition requires relatively large contact forces between the probe and the patient, which deform the tissue. One approach is to apply a deformable (nonrigid) registration between the ultrasound and CT images, but this is technically challenging. Our approach is to apply the same tissue deformation during CT image acquisition, thereby removing the need for nonrigid registration. During CT acquisition we use a model (fake) ultrasound probe to avoid the image artifacts that a real probe would cause. Thus, the robotic system must enable an expert ultrasonographer to place the probe during simulation, record the relevant information (e.g., position and force), and then allow a less experienced person to use the robot to reproduce this placement (and tissue deformation) during the subsequent fractionated radiotherapy sessions.
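The record-and-reproduce requirement can be illustrated with a deliberately simplified sketch: store the expert's probe position, probe axis, and contact force, then at a later session advance along the recorded axis under a proportional force loop until the measured force matches the recorded one. The tissue is modeled as a linear spring whose surface may sit at a slightly different depth than during simulation. The data layout, the controller, the gain, and the spring model are all hypothetical assumptions for illustration and do not describe the project's actual robot software.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Placement:
    """Hypothetical record of an expert's probe placement during simulation."""
    position: np.ndarray  # reference probe-tip position (m)
    axis: np.ndarray      # direction along the probe, pointing into the tissue
    force: float          # contact force at the expert's placement (N)


def simulated_tissue_force(depth, surface_depth, stiffness):
    """Toy linear-spring tissue: no force until the probe reaches the surface."""
    return stiffness * max(0.0, depth - surface_depth)


def reproduce_placement(p, surface_depth, stiffness, kp=0.001, tol=0.01,
                        max_iter=1000):
    """Advance along the recorded axis with a proportional force loop until
    the measured force is within tol of the recorded force.
    Returns (final tip position, final measured force)."""
    axis = np.asarray(p.axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    depth = 0.0
    force = simulated_tissue_force(depth, surface_depth, stiffness)
    for _ in range(max_iter):
        error = p.force - force
        if abs(error) < tol:
            break
        # Push deeper if the force is too low, back off if it is too high.
        depth += kp * error
        force = simulated_tissue_force(depth, surface_depth, stiffness)
    return p.position + depth * axis, force
```

With a 5 N target, a 500 N/m spring, and the surface 2 mm beyond the reference position, the loop settles about 12 mm along the axis (2 mm to the surface plus 5/500 m of compression). The product `kp * stiffness` must stay below 2 for this loop to converge. Matching force magnitude alone is a simplification, since reproducing the full tissue deformation would also require matching the probe pose.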